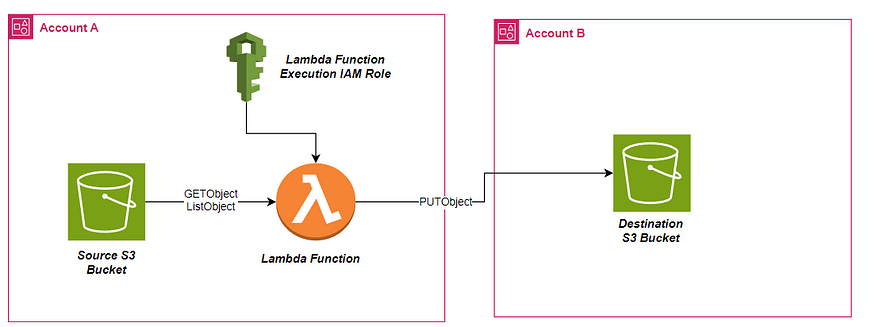

Cross-Account S3 Data Transfer Using Lambda Function

In this blog, we will learn how to securely transfer data from an S3 bucket in Account A (source) to an S3 bucket in Account B (destination). We will create an IAM role with least-privilege access, create a Lambda function, deploy the Lambda code, and add the bucket policies.

Steps to Set Up Data Transfer Between Cross-Account S3 Buckets

1. Create the source and destination Amazon S3 buckets.
2. Create the Lambda function IAM role and policy.
3. Create the Lambda function.
4. Add the Lambda function environment variables.
5. Create an Amazon S3 trigger for the Lambda function.
6. Update the bucket policies.
7. Test the data transfer.

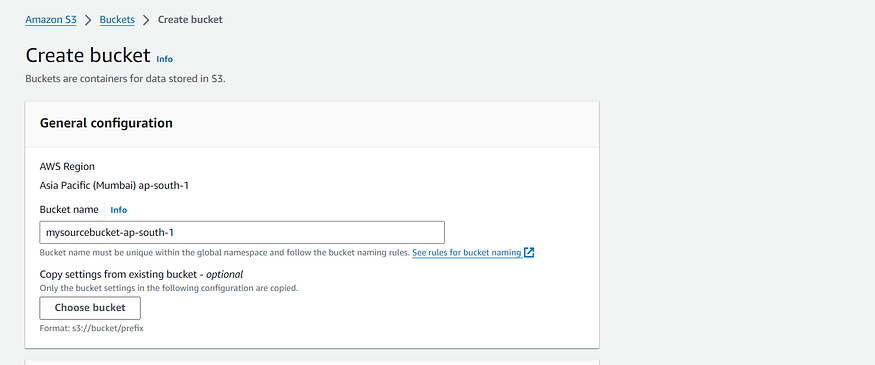

Step 1:- Create the source and destination Amazon S3 buckets

Note: If you have already created the source and destination S3 buckets, skip this step.

1. Open the Amazon S3 console.

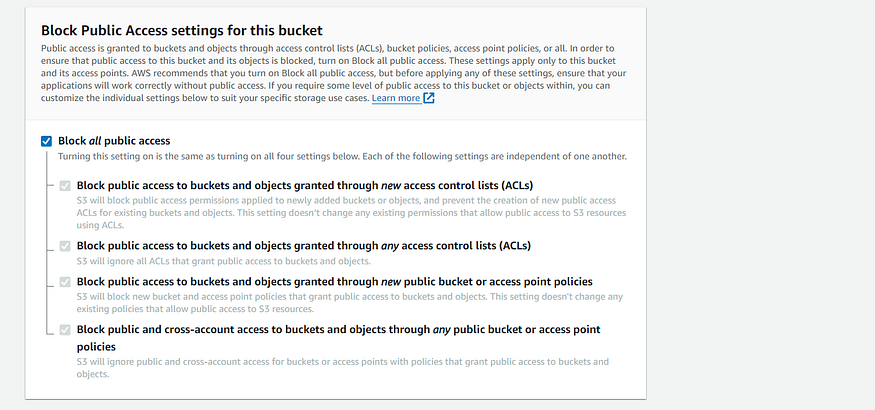

2. Choose Create bucket and enter the source bucket name. Make sure you create the bucket in the same AWS Region as the destination bucket.

3. Leave the remaining settings at their defaults, and make sure Block all public access stays enabled. Then choose Create bucket.

4. Repeat steps 1–3 in your destination account (Account B) to create the destination bucket.

For more information on how to create an S3 bucket, see Creating a bucket.
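If you prefer to script this step, the sketch below builds the `CreateBucket` request for boto3 and turns on all four Block Public Access settings. It assumes you have credentials (for example, a named CLI profile) for the account that should own each bucket; `create_bucket_kwargs`, `PUBLIC_ACCESS_BLOCK`, and `create_private_bucket` are hypothetical helper names, not part of boto3.

```python
def create_bucket_kwargs(bucket_name, region):
    """Build keyword arguments for the S3 CreateBucket call.

    us-east-1 is the default Region and must NOT be sent as a
    LocationConstraint; every other Region must be.
    """
    kwargs = {"Bucket": bucket_name}
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


# Block all public access, matching the console default
PUBLIC_ACCESS_BLOCK = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": True,
    "RestrictPublicBuckets": True,
}


def create_private_bucket(bucket_name, region, profile_name=None):
    """Create a bucket and block public access (hypothetical helper)."""
    import boto3  # imported here so the helpers above work without boto3

    session = boto3.Session(profile_name=profile_name, region_name=region)
    s3 = session.client("s3")
    s3.create_bucket(**create_bucket_kwargs(bucket_name, region))
    s3.put_public_access_block(
        Bucket=bucket_name,
        PublicAccessBlockConfiguration=PUBLIC_ACCESS_BLOCK,
    )
```

You would call it once per account, for example `create_private_bucket("my-source-bucket", "us-east-1", profile_name="account-a")` and again with an Account B profile; the bucket and profile names here are placeholders.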

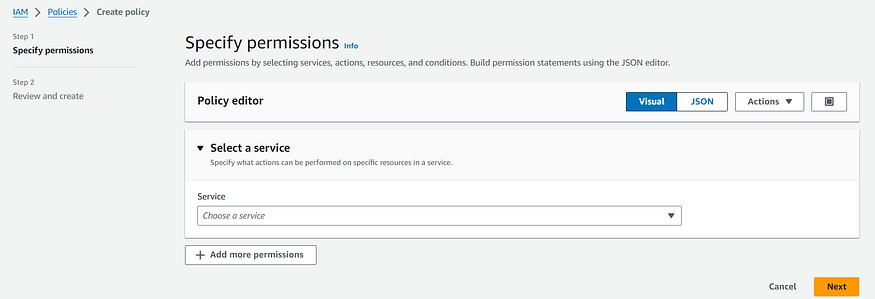

Step 2:- Create the Lambda function IAM role and policy.

The Lambda function, along with its IAM role and policy, will be created in Account A, where the source bucket exists.

1. Open the IAM console, choose Policies, and then choose Create policy. In the policy editor, choose JSON.

2. Paste the JSON policy below into the editor. Replace the placeholder source and destination bucket values in the Resource ARNs with your own bucket names.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::change-to-source-bucket-name",
        "arn:aws:s3:::change-to-source-bucket-name/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject"
      ],
      "Resource": [
        "arn:aws:s3:::change-to-destination-bucket-name",
        "arn:aws:s3:::change-to-destination-bucket-name/*"
      ]
    }
  ]
}
```

This policy grants GetObject and ListBucket permissions on the source bucket and PutObject permission on the destination bucket. In other words, the function can only list and read objects in the source bucket, and it can only write objects to the destination bucket. Note that JSON does not allow comments, so the policy must contain only the JSON itself.
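To avoid hand-editing ARNs, you could generate the policy in Python. The sketch below is a slightly tighter variant of the policy above (my assumption, not the author's version): `s3:ListBucket` is granted only on the bucket ARN, and `s3:GetObject`/`s3:PutObject` only on object ARNs (`bucket/*`), because ListBucket is a bucket-level action while the other two act on objects. `build_lambda_policy` is a hypothetical helper name.

```python
import json


def build_lambda_policy(source_bucket, dest_bucket):
    """Return a least-privilege identity policy for the copy Lambda."""
    src = f"arn:aws:s3:::{source_bucket}"
    dst = f"arn:aws:s3:::{dest_bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # List the source bucket (bucket-level action)
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": src,
            },
            {   # Read objects from the source bucket
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"{src}/*",
            },
            {   # Write objects to the destination bucket
                "Effect": "Allow",
                "Action": "s3:PutObject",
                "Resource": f"{dst}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Print JSON that can be pasted into the IAM policy editor
    print(json.dumps(build_lambda_policy("my-source-bucket", "my-dest-bucket"), indent=2))
```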

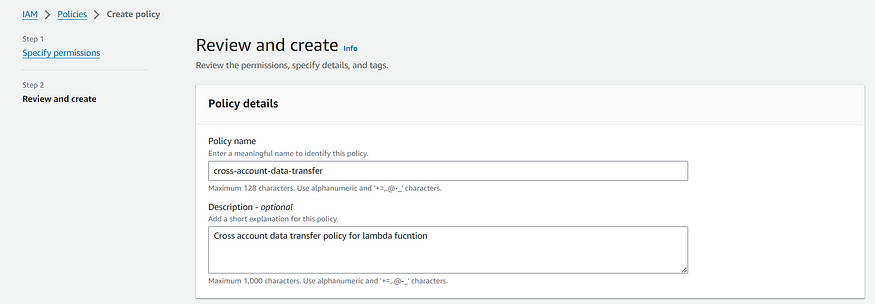

3. Enter a policy name, and then choose Create policy.

With the policy in place, create the Lambda function's IAM role and attach this policy to it.

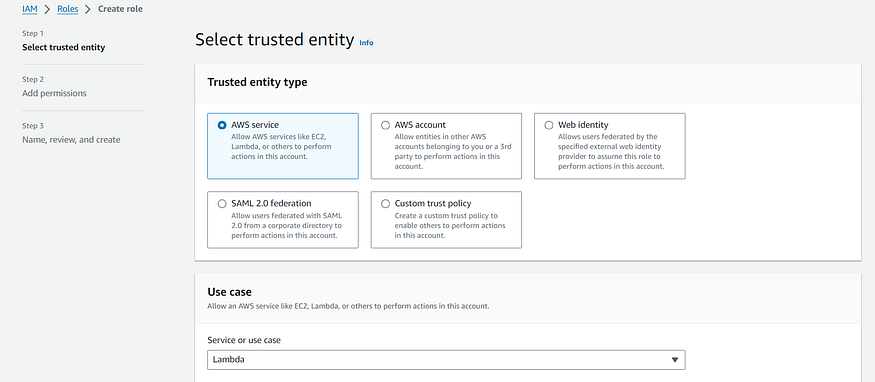

4. In the IAM console, choose Roles, and then choose Create role. For Trusted entity type, select AWS service, and under Use case, choose Lambda.

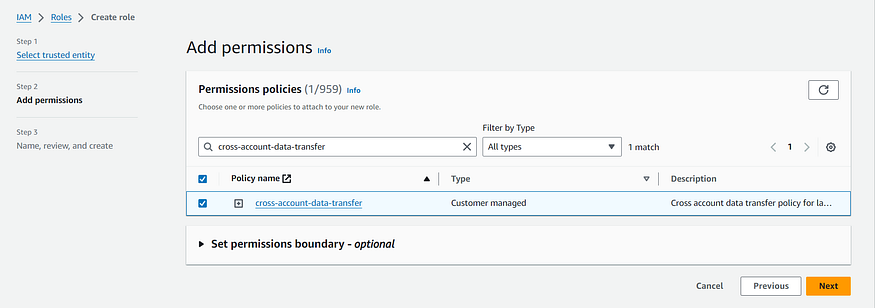

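5. On the next page, select the IAM policy that you created in the previous step, and then choose Next. If you want the function's print() output to appear in CloudWatch Logs, also attach the AWS managed policy AWSLambdaBasicExecutionRole, because the custom policy above grants only S3 permissions.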

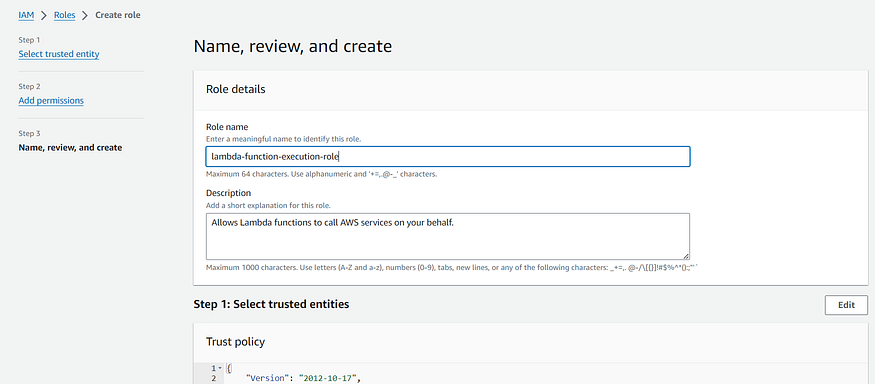

6. Enter a role name. Leave the trusted entity and all other settings at their defaults, and then choose Create role.
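For reference, the trust policy the console creates when you choose AWS service and Lambda is what allows the Lambda service to assume the role. The sketch below reproduces it and shows the equivalent boto3 IAM calls (`create_role`, `attach_role_policy`). The helper `create_lambda_role` is hypothetical, and the role name and policy ARN you pass in are your own values.

```python
import json

# Trust policy: allows the Lambda service to assume this role
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def create_lambda_role(role_name, policy_arn, profile_name=None):
    """Create the execution role in Account A and attach the copy policy.

    Returns the role ARN, which the bucket policies in Step 6 need.
    """
    import boto3  # imported lazily so the trust policy can be used without boto3

    iam = boto3.Session(profile_name=profile_name).client("iam")
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(LAMBDA_TRUST_POLICY),
    )
    iam.attach_role_policy(RoleName=role_name, PolicyArn=policy_arn)
    return role["Role"]["Arn"]
```

Keep the returned role ARN handy; it is the principal you will paste into both bucket policies later.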

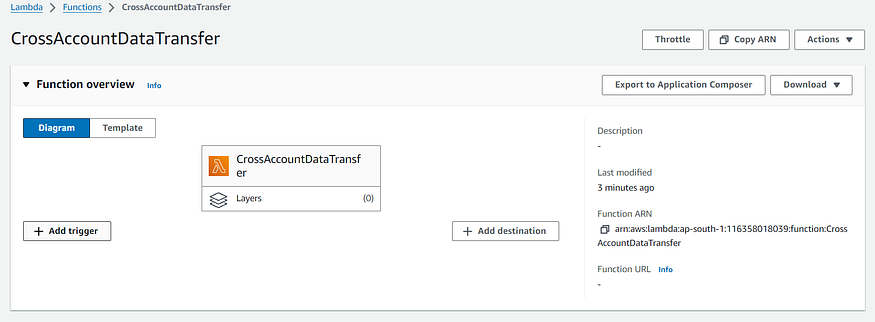

Step 3:- Create the Lambda function

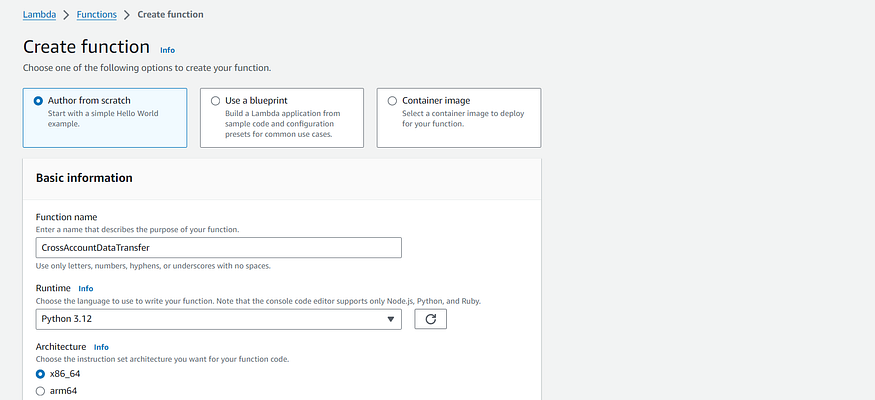

1. Open the Functions page in the Lambda console. Choose Create function, and then choose Author from scratch. For Function name, enter a name for your function. From the Runtime dropdown list, choose Python 3.12.

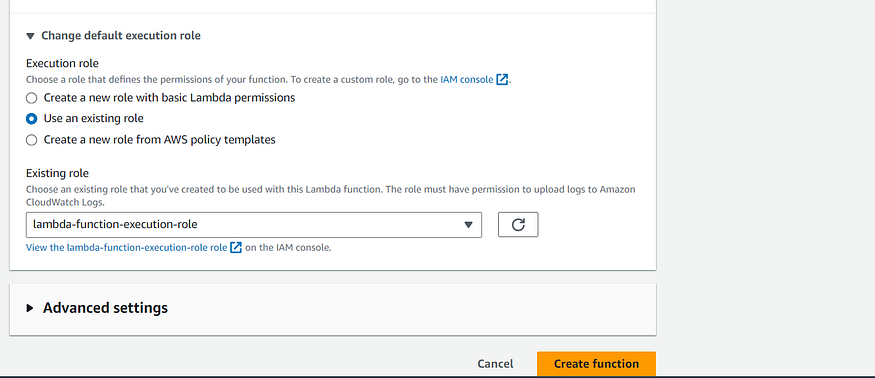

2. Under Permissions, expand Change default execution role, and then choose Use an existing role. Select the role that you created in the previous step, and then choose Create function.

3. Choose the Code tab, paste the Python code below, and then choose Deploy.

```python
import hashlib
import os
import urllib.parse

import boto3

# Create the client once, outside the handler, so warm invocations reuse it
s3_client = boto3.client('s3')


def get_md5_checksum(bucket, key):
    """Calculate the MD5 checksum of an S3 object.

    Optional helper; lambda_handler does not call it. It reads the whole
    object into memory, so use it only for small objects.
    """
    try:
        response = s3_client.get_object(Bucket=bucket, Key=key)
        file_data = response['Body'].read()
        return hashlib.md5(file_data).hexdigest()
    except s3_client.exceptions.NoSuchKey:
        print(f"Error: The object with key '{key}' does not exist in bucket '{bucket}'")
        raise


def lambda_handler(event, context):
    # Source and destination buckets (read from environment variables, see Step 4)
    source_bucket = os.environ['SOURCE_BUCKET']
    dest_bucket = os.environ['DEST_BUCKET']
    failed_keys = []

    # Loop through the records in the event
    for record in event['Records']:
        # S3 URL-encodes object keys in event notifications (e.g. spaces become '+'),
        # so decode the key before passing it to the S3 API
        source_key = urllib.parse.unquote_plus(record['s3']['object']['key'])
        print(f"Processing file: {source_key} from bucket: {source_bucket}")

        # Copy the file with the same key to the destination bucket
        dest_key = source_key
        try:
            # Server-side copy; copy_object handles objects up to 5 GB
            copy_source = {'Bucket': source_bucket, 'Key': source_key}
            s3_client.copy_object(CopySource=copy_source, Bucket=dest_bucket, Key=dest_key)
            print(f"File transferred from {source_bucket}/{source_key} to {dest_bucket}/{dest_key}")
        except Exception as e:
            print(f"Failed to copy object {source_key} to {dest_bucket}: {e}")
            failed_keys.append(source_key)

    # Report failures as an error instead of returning success; for asynchronous
    # S3 invocations, Lambda then retries the event (twice by default)
    if failed_keys:
        raise RuntimeError(f"Failed to copy {len(failed_keys)} object(s): {failed_keys}")

    return {
        'statusCode': 200,
        'body': 'File transfer successfully completed.'
    }
```
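Before wiring up the trigger, you can exercise the copy logic locally without AWS. The sketch below restates the handler's loop in simplified form (no error collection) as a function that receives the S3 client as a parameter, so a fake client can record `copy_object` calls. The event shape follows the S3 event notification format (`Records[].s3.bucket.name`, `Records[].s3.object.key`); `copy_records`, `FakeS3Client`, and `make_event` are hypothetical names, not part of boto3.

```python
import urllib.parse


def copy_records(event, client, source_bucket, dest_bucket):
    """Copy every object referenced in an S3 event; return the decoded keys."""
    copied = []
    for record in event["Records"]:
        # Keys arrive URL-encoded in S3 notifications (spaces become '+')
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        client.copy_object(
            CopySource={"Bucket": source_bucket, "Key": key},
            Bucket=dest_bucket,
            Key=key,
        )
        copied.append(key)
    return copied


class FakeS3Client:
    """Records copy_object calls instead of calling S3."""

    def __init__(self):
        self.calls = []

    def copy_object(self, **kwargs):
        self.calls.append(kwargs)
        return {}


def make_event(bucket, *keys):
    """Build a minimal S3 event whose keys are URL-encoded like real ones."""
    return {
        "Records": [
            {"s3": {"bucket": {"name": bucket},
                    "object": {"key": urllib.parse.quote_plus(k, safe="/")}}}
            for k in keys
        ]
    }
```

A key such as `reports/q1 summary.csv` arrives as `reports/q1+summary.csv`, which is why the decoding step matters: without it, `copy_object` would look for a key containing a literal `+` and fail.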

Step 4:- Add Lambda Function Environment variables

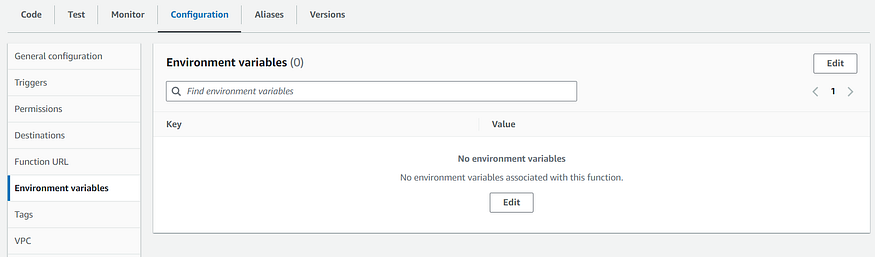

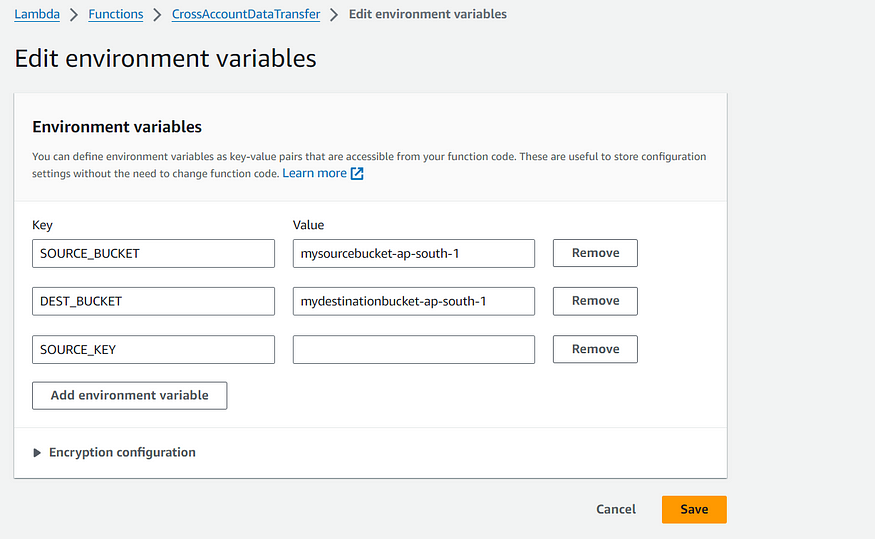

1. On the function's Configuration tab, choose Environment variables in the left pane, and then choose Edit.

2. Add the environment variables.

Set SOURCE_BUCKET to your source bucket name and DEST_BUCKET to your destination bucket name. A SOURCE_KEY variable is not required, because the function reads each object key from the S3 event record; if you add one, leave its value blank.

Note: The variable names (keys) must match the names used in the code exactly; only the values change according to your source and destination bucket names.

After adding the environment variables, choose Save.
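The same variables can be set from code. A minimal sketch, assuming boto3 and your own function name: `update_function_configuration` replaces the function's entire set of environment variables, so both keys are passed together. The hypothetical helper `build_environment` also rejects empty values and a destination equal to the source (which would make the function re-trigger itself), problems that would otherwise only surface at run time.

```python
REQUIRED_VARS = ("SOURCE_BUCKET", "DEST_BUCKET")


def build_environment(source_bucket, dest_bucket):
    """Return the Environment argument for update_function_configuration."""
    variables = {"SOURCE_BUCKET": source_bucket, "DEST_BUCKET": dest_bucket}
    missing = [name for name in REQUIRED_VARS if not variables[name]]
    if missing:
        raise ValueError(f"Empty value for: {', '.join(missing)}")
    if source_bucket == dest_bucket:
        # Writing back to the source bucket would re-trigger the function
        raise ValueError("SOURCE_BUCKET and DEST_BUCKET must differ")
    return {"Variables": variables}


def set_environment(function_name, source_bucket, dest_bucket, profile_name=None):
    """Apply the variables to the Lambda function in Account A."""
    import boto3  # lazy import keeps build_environment usable without boto3

    client = boto3.Session(profile_name=profile_name).client("lambda")
    # This replaces ALL existing environment variables on the function
    client.update_function_configuration(
        FunctionName=function_name,
        Environment=build_environment(source_bucket, dest_bucket),
    )
```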

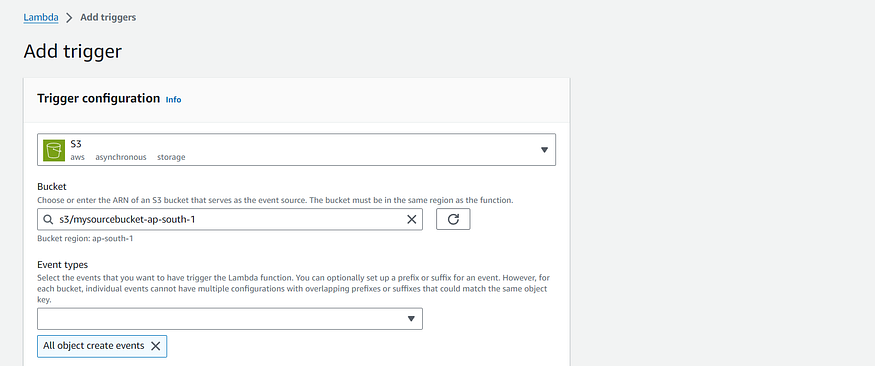

Step 5:- Create an Amazon S3 trigger for the Lambda function

1. In Function overview, choose Add trigger.

2. From the Trigger configuration dropdown list, choose S3. For Bucket, choose your source bucket. From the Event types dropdown list, choose All object create events.

3. Select the acknowledgement that begins "I acknowledge that using the same S3 bucket for both input and output is not recommended", and then choose Add. Because this function writes to a different bucket, it will not invoke itself recursively.
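Behind the scenes, adding the trigger does two things: it adds a resource-based permission so that S3 can invoke the function, and it writes a notification configuration on the source bucket. The sketch below shows both with boto3, assuming Account A credentials; `notification_config`, `add_s3_trigger`, and the statement ID `allow-s3-source-bucket` are hypothetical names.

```python
def notification_config(function_arn):
    """S3 notification config that invokes the function on every object create."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:*"],  # "All object create events"
            }
        ]
    }


def add_s3_trigger(function_name, function_arn, source_bucket, account_id,
                   profile_name=None):
    """Grant S3 invoke permission and attach the notification (Account A)."""
    import boto3  # lazy import so notification_config works without boto3

    session = boto3.Session(profile_name=profile_name)

    # 1) Allow S3 (only this bucket, owned by this account) to invoke the function
    session.client("lambda").add_permission(
        FunctionName=function_name,
        StatementId="allow-s3-source-bucket",
        Action="lambda:InvokeFunction",
        Principal="s3.amazonaws.com",
        SourceArn=f"arn:aws:s3:::{source_bucket}",
        SourceAccount=account_id,
    )

    # 2) Point the source bucket's event notifications at the function.
    # This call replaces the bucket's existing notification configuration.
    session.client("s3").put_bucket_notification_configuration(
        Bucket=source_bucket,
        NotificationConfiguration=notification_config(function_arn),
    )
```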

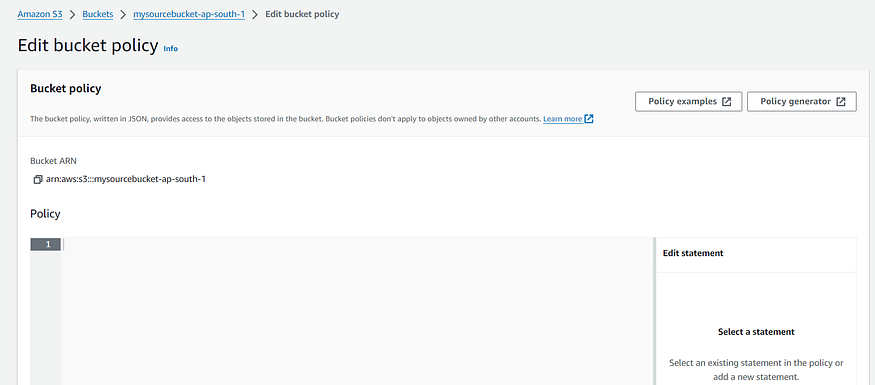

Step 6:- Update the bucket policy.

Now, update both the source and destination bucket policies so that the Lambda function has permission to access the buckets.

1. Go to the source bucket in Account A.

2. Open the Permissions tab and edit the bucket policy.

3. Add the policy below to the source S3 bucket in Account A.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::XXXXXXXXXX:role/lambda_exec_role"
      },
      "Action": [
        "s3:GetObject",
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::change-to-source-bucket-name",
        "arn:aws:s3:::change-to-source-bucket-name/*"
      ]
    }
  ]
}
```

Note: Replace the principal ARN with the ARN of the Lambda execution role you created in Step 2, and replace the bucket name in the Resource ARNs with the name of the bucket you are adding this policy to. Then choose Save changes.
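If you manage several bucket pairs, a small generator keeps the principal and resource ARNs consistent and catches an unreplaced placeholder such as `XXXXXXXXXX`. The sketch below is a hypothetical helper that produces the source-bucket policy above; its ARN check assumes the standard `aws` partition and a 12-digit account ID. Because the role and the source bucket live in the same account, the role's identity policy from Step 2 already allows this access on its own; the bucket policy adds an explicit allow on the resource side.

```python
import re

# arn:aws:iam::<12-digit account id>:role/<optional path/>role-name
ROLE_ARN_RE = re.compile(r"^arn:aws:iam::\d{12}:role/[\w+=,.@/-]+$")


def source_bucket_policy(role_arn, source_bucket):
    """Bucket policy letting the Lambda role list and read the source bucket."""
    if not ROLE_ARN_RE.match(role_arn):
        raise ValueError(f"Not an IAM role ARN: {role_arn}")
    bucket_arn = f"arn:aws:s3:::{source_bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": role_arn},
                "Action": ["s3:GetObject", "s3:ListBucket"],
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
            }
        ],
    }
```

Serialize the result with `json.dumps(...)` before pasting it into the console or passing it to an API call.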

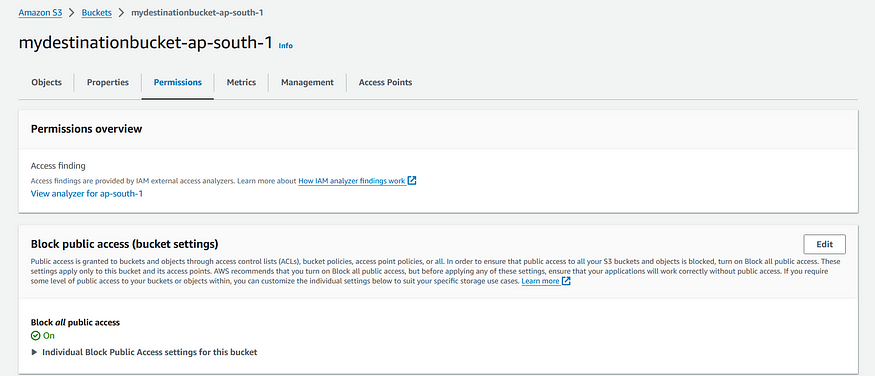

Next, update the bucket policy on the destination bucket in Account B.

1. Go to the destination bucket in Account B.

2. Open the Permissions tab and edit the bucket policy.

3. Add the policy below to the destination S3 bucket in Account B.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::XXXXXXXXXX:role/lambda_exec_role"
      },
      "Action": [
        "s3:PutObject"
      ],
      "Resource": [
        "arn:aws:s3:::change-to-destination-bucket-name",
        "arn:aws:s3:::change-to-destination-bucket-name/*"
      ]
    }
  ]
}
```

Note: Replace the principal ARN with the ARN of the Lambda execution role you created in Account A (Step 2), and replace the bucket name in the Resource ARNs with the name of the bucket you are adding this policy to.

After that, choose Save changes.
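The destination policy has to be applied by a principal in Account B, because it is set on a bucket that Account B owns. The sketch below assumes a named CLI profile for Account B (the profile name `account-b` and both helper names are hypothetical). It writes the same statement as the policy above, scoped to object ARNs only since `s3:PutObject` acts on objects, and calls boto3's `put_bucket_policy`, which expects the policy as a JSON string.

```python
import json


def destination_bucket_policy(role_arn, dest_bucket):
    """Bucket policy letting Account A's Lambda role write objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": role_arn},
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{dest_bucket}/*",
            }
        ],
    }


def apply_destination_policy(role_arn, dest_bucket, profile_name="account-b"):
    """Attach the policy using Account B credentials (hypothetical profile)."""
    import boto3  # lazy import: only needed when actually applying the policy

    s3 = boto3.Session(profile_name=profile_name).client("s3")
    # put_bucket_policy takes the policy document as a JSON string
    s3.put_bucket_policy(
        Bucket=dest_bucket,
        Policy=json.dumps(destination_bucket_policy(role_arn, dest_bucket)),
    )
```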

Finally, to validate the transfer, upload an object to the source bucket in Account A and confirm that it appears in the destination bucket in Account B.
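To automate this check, you could upload a uniquely named test object with Account A credentials and poll the destination bucket with Account B credentials. A sketch, assuming two hypothetical CLI profiles (`account-a`, `account-b`); `wait_until` is a small generic poller with an injectable `sleep`, and `head_object` raises botocore's `ClientError` while the object is not yet present.

```python
import time
import uuid


def wait_until(check, attempts=10, delay=2.0, sleep=time.sleep):
    """Call check() until it returns True; return True on success, False on timeout."""
    for attempt in range(attempts):
        if check():
            return True
        if attempt < attempts - 1:
            sleep(delay)
    return False


def validate_transfer(source_bucket, dest_bucket,
                      src_profile="account-a", dst_profile="account-b"):
    """Upload a test object to the source and wait for it in the destination."""
    import boto3  # lazy imports so wait_until can be used without boto3
    from botocore.exceptions import ClientError

    key = f"transfer-test/{uuid.uuid4()}.txt"  # unique key for this test run
    src = boto3.Session(profile_name=src_profile).client("s3")
    dst = boto3.Session(profile_name=dst_profile).client("s3")

    src.put_object(Bucket=source_bucket, Key=key, Body=b"cross-account copy test")

    def copied():
        try:
            dst.head_object(Bucket=dest_bucket, Key=key)
            return True
        except ClientError:
            return False  # not there yet (or Account B cannot read it)

    return wait_until(copied)
```

If the object never appears, the function's CloudWatch logs (see the note in Step 2) are the first place to look for an AccessDenied error from `copy_object`.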

Conclusion:

By following these steps, you can create a Lambda function that copies files from a source Amazon S3 bucket to a destination S3 bucket in another account. Along the way, you learned how to add an S3 trigger to a Lambda function, write bucket policies, and create an IAM role with a least-privilege policy for a Lambda function that accesses a cross-account S3 bucket.

Follow-up

If you enjoy reading and would like to read more in the future, please subscribe here and connect with me on LinkedIn.

You can buy me a coffee too🤎🤎🤎